Millions of people now turn to ChatGPT for answers to legal questions. Whether it's understanding a lease agreement, wondering about their rights after a car accident, or trying to make sense of a confusing contract clause, the temptation to ask an AI is understandable. It's free, instant, and available 24/7.

But can ChatGPT actually give legal advice? And more importantly—should you trust it?

This guide explains exactly what ChatGPT can and cannot do when it comes to legal matters, the real risks of relying on it, and when you should use specialized legal AI tools or consult a human lawyer instead.

In this article, you'll learn:

- Why ChatGPT disclaims giving legal advice (and what that means)

- The documented cases where ChatGPT invented fake legal citations

- What ChatGPT can legitimately help with for legal questions

- The critical differences between general AI and specialized legal AI

- A clear framework for when to use AI vs. when to hire a lawyer

The Short Answer

No, ChatGPT cannot give legal advice—and it will tell you so. When asked legal questions, ChatGPT typically responds with disclaimers like "I'm not a lawyer" and "this is not legal advice."

This isn't just corporate caution. There are fundamental reasons why a general-purpose AI chatbot shouldn't be your source for legal guidance:

-

ChatGPT hallucinates. The model has been documented inventing fake case citations, statutes that don't exist, and legal principles that sound plausible but are completely wrong.

-

Law is jurisdiction-specific. What's legal in Germany may be illegal in the UK. Employment protections in France differ vastly from those in the United States. ChatGPT doesn't reliably account for these critical differences between countries.

-

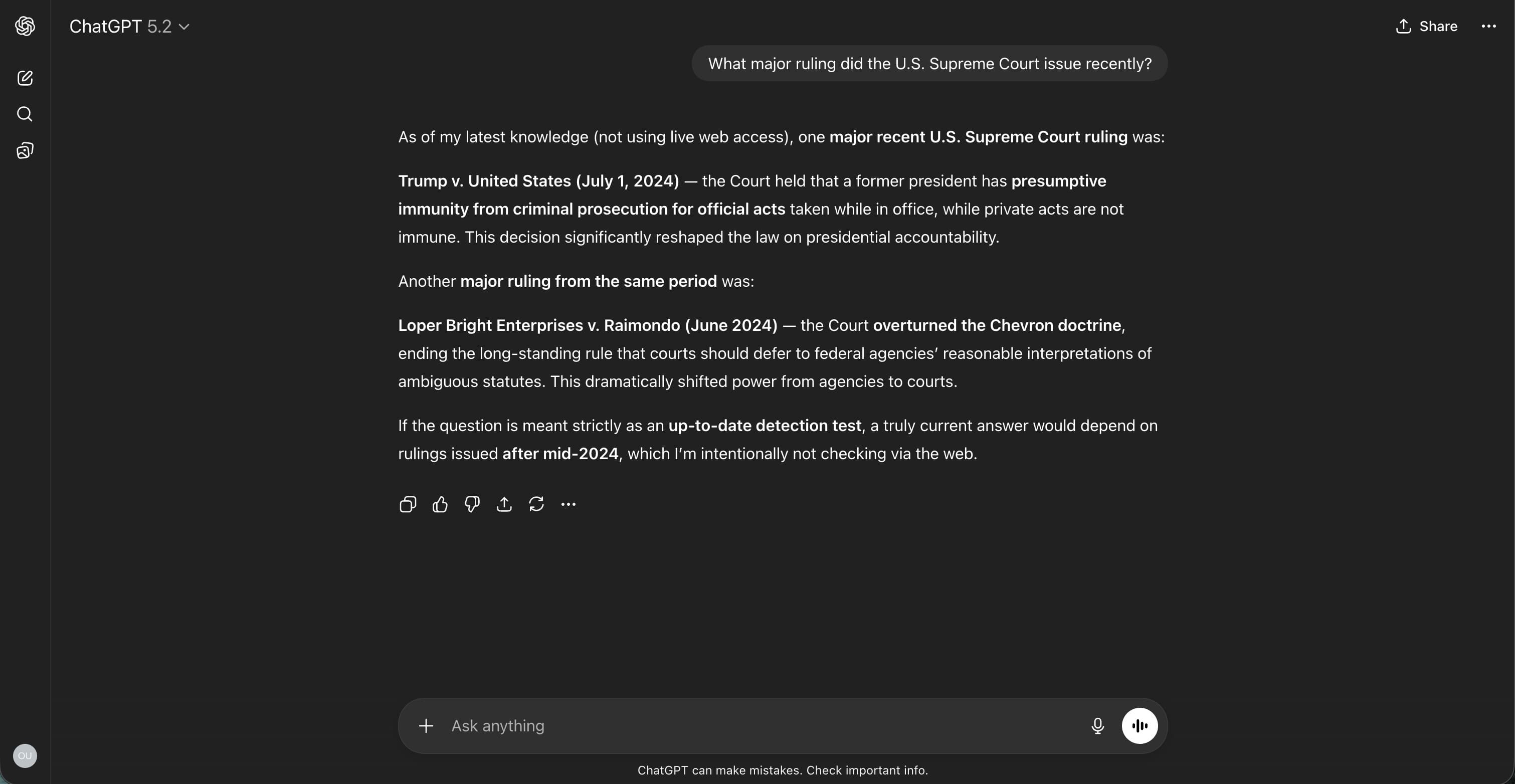

Training data has cutoffs. Laws change constantly. ChatGPT's knowledge has a training cutoff date, meaning recent legal changes may not be reflected.

What ChatGPT Can Actually Do for Legal Questions

Despite its limitations, ChatGPT isn't useless for legal matters. Here's where it can legitimately help:

General Legal Education

ChatGPT can explain legal concepts in plain English. If you want to understand what "negligence" means, how contract law generally works, or what the difference between civil and criminal cases is, ChatGPT can provide helpful educational overviews.

Summarizing Documents

You can paste a contract or legal document into ChatGPT and ask for a plain-language summary. While you shouldn't rely solely on this summary for important decisions, it can help you identify sections that need closer attention.

Drafting Starting Points

Need a basic template for a simple letter or document? ChatGPT can provide a starting point. However, any document with legal implications should be reviewed by someone who understands the law.

Research Direction

ChatGPT can suggest what legal topics or terms to research further. It can point you toward the right questions to ask—even if it can't reliably answer those questions itself.

The Real Risks of Using ChatGPT for Legal Advice

1. Hallucinated Citations and Fake Cases

This isn't theoretical. In 2023, a New York lawyer named Steven Schwartz made national headlines after a court filing he prepared in Mata v. Avianca cited six fake cases generated by ChatGPT. The cases sounded real, complete with case numbers, court names, and legal reasoning, but they didn't exist.

Schwartz, a colleague, and their law firm were sanctioned by the court and fined $5,000. Judge P. Kevin Castel described the situation as "an unprecedented circumstance" and found that the fabricated cases were "bogus judicial decisions with bogus quotes and bogus internal citations."

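This wasn't an isolated incident. Courts have since sanctioned other lawyers for filing AI-fabricated citations, and researchers testing general-purpose chatbots have found that they frequently invent or misstate cases when asked about specific decisions or legal precedents.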

2. Outdated Information

Laws change frequently. New statutes are passed, courts issue new rulings, and regulations are updated. ChatGPT's training data has a cutoff date, meaning it may provide information based on laws that have since changed.

For example:

- Non-compete rules have been in flux: the FTC issued a near-nationwide ban in 2024, but a federal court set it aside before it took effect

- Privacy regulations like state-level data protection laws are constantly evolving

- Tax law changes with nearly every legislative session

Relying on outdated legal information can lead to costly mistakes.

3. No Jurisdiction Awareness

Legal questions almost always depend on where you are. ChatGPT doesn't reliably distinguish between:

- Federal vs. state law

- Different state laws (California's near-total ban on non-compete agreements vs. Texas, which enforces reasonable ones)

- Local ordinances and regulations

- International legal differences

When ChatGPT answers a legal question, it often provides a generic response that may or may not apply to your specific jurisdiction.

4. Missing Context

Legal advice requires understanding the full context of a situation. A human lawyer asks follow-up questions, understands the nuances of your specific circumstances, and considers factors you might not think to mention.

ChatGPT takes your question at face value. It doesn't know:

- Your complete factual situation

- Documents you haven't shared

- The other party's likely arguments

- Strategic considerations for your case

- Local court practices and preferences

5. No Attorney-Client Privilege

Anything you tell ChatGPT is not protected by attorney-client privilege. This means:

- Your conversations may be stored by OpenAI and, depending on your settings, used to train its models

- There's no legal protection preventing disclosure

- In litigation, opposing counsel could potentially access your ChatGPT conversations

When you speak with a licensed attorney, those communications are generally protected by privilege, one of the most important protections in the legal system.

When ChatGPT Gets Legal Questions Wrong

Beyond fabricated citations, ChatGPT makes substantive legal errors. Common problems include:

Oversimplification: ChatGPT might tell you that you "can" or "cannot" do something without explaining the exceptions, conditions, or gray areas that often determine real-world outcomes.

Conflating jurisdictions: An answer about California landlord-tenant law might inadvertently include elements from New York law or general common law principles that don't apply in your state.

Missing recent changes: The law around AI itself, non-compete agreements, data privacy, and many other areas has changed significantly in recent years. ChatGPT may provide information based on pre-change legal frameworks.

Ignoring procedural requirements: Legal rights often come with strict procedural requirements—filing deadlines, notice requirements, specific forms. ChatGPT rarely addresses these crucial details.

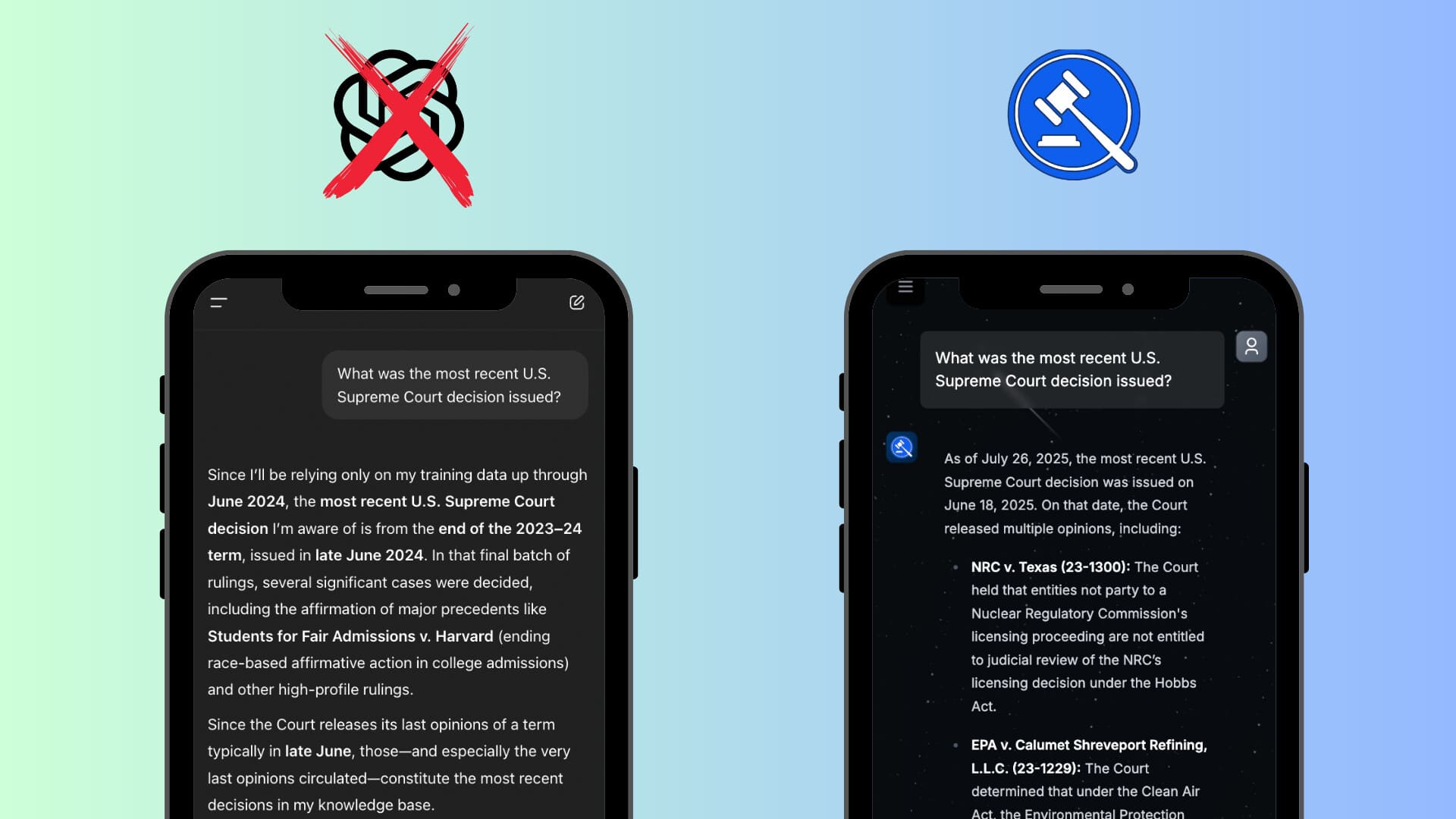

Specialized Legal AI vs. ChatGPT

Not all AI is created equal when it comes to legal questions. Specialized legal AI tools like LegesGPT are designed specifically for legal research and differ from ChatGPT in important ways:

| Feature | ChatGPT | Specialized Legal AI |
|---|---|---|
| Citation verification | No - frequently hallucinates | Yes - citations linked to real sources |
| Jurisdiction awareness | Limited | Built-in multi-jurisdiction support |
| Legal database access | No direct access | Connected to case law and statutes |
| Training focus | General knowledge | Legal documents and reasoning |
| Source transparency | Often unclear | Citations and sources provided |
| Update frequency | Periodic training cutoffs | Regular legal database updates |

The key difference: specialized legal AI tools check their outputs against actual legal databases. Because LegesGPT links each citation to its source, you can confirm that a cited case exists and says what the AI claims it says.

When to Use AI for Legal Questions

Here's a practical framework for deciding when AI can help—and when you need human expertise:

AI Can Help When:

- You need to understand basic legal concepts or terminology

- You want a plain-language summary of a document before deeper review

- You're doing initial research to understand what questions to ask

- The stakes are low and you're looking for general information

- You're using specialized legal AI with verified citations

You Need a Lawyer When:

- You're facing litigation or legal proceedings

- Significant money or assets are at stake

- Criminal charges are involved

- You need to sign important contracts (employment, business, real estate)

- You're dealing with family law matters (divorce, custody, adoption)

- You're dealing with immigration issues

- You need advice specific to your jurisdiction and circumstances

- The other side has legal representation

The Hybrid Approach

Many people find the best approach combines AI tools with human expertise:

- Use AI to understand the basics and formulate questions

- Use specialized legal AI to research relevant cases and statutes

- Consult a lawyer for advice specific to your situation

- Use AI to help understand and organize information your lawyer provides

This approach maximizes efficiency while ensuring you get reliable, jurisdiction-specific advice when it matters.

What About Free Legal Advice?

Many people turn to ChatGPT because they can't afford a lawyer. If cost is a concern, consider these alternatives:

- Legal aid organizations: Provide free help to qualifying individuals

- Bar association referral services: Often offer low-cost initial consultations

- Law school clinics: Supervised law students provide free assistance

- Pro bono programs: Many lawyers volunteer to take some cases for free

- Specialized legal AI: Tools like LegesGPT offer affordable access with verified legal information

These options provide more reliable guidance than a general-purpose chatbot.

The Bottom Line

ChatGPT is a remarkable technology, but it's not a lawyer—and it shouldn't be treated as one.

For understanding basic legal concepts, getting plain-language explanations, or starting your research, ChatGPT can be a useful tool. But for actual legal advice—guidance about your specific situation, in your specific jurisdiction, with real consequences—you need either a licensed attorney or specialized legal AI that verifies its outputs against real legal sources.

The lawyers sanctioned for using ChatGPT's fake citations learned this lesson the hard way. Don't make the same mistake. When legal matters are at stake, verify everything, use purpose-built legal tools, and consult professionals when the stakes are high.

Your legal rights are too important to trust to an AI that might be making things up.